Evaluating your customized AI tool

Disclaimer: The thoughts and ideas presented in this course are not to be substituted for legal or ethical advice and are only meant to give you a starting point for gathering information about AI policy and regulations to consider.

Learning objectives:

- Understand the motivation behind evaluating your customized AI tool

- Define your own goals for evaluating the accuracy, computational efficiency, and usability of your AI tool

- Recognize metrics that could be used for evaluation of accuracy, computational efficiency, and usability.

Intro

Evaluating a software tool is critical, for several reasons that feed into each other.

- Evaluating your AI tool will help identify areas for improvement

- Evaluating your AI tool will demonstrate value to funders so you can actually make those improvements

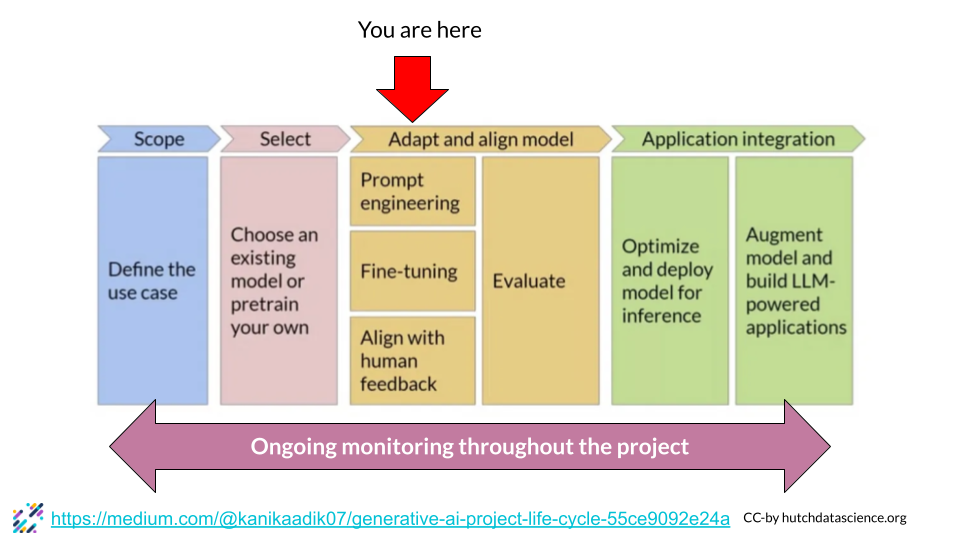

It’s important to keep a finger on the pulse of your project as it is developing. Ideally, you should be monitoring your AI’s bias and performance throughout the project. But once you have a stable AI tool, it is an especially good time to gather more evaluations.

As a reminder, a good AI tool is generally accurate, in that it gives output that is useful. It is also computationally efficient: we won’t be able to actually deploy the tool if it is computationally costly or takes too long to run a query.

But for the purposes of evaluation, we’re going to add one more criterion: a good AI tool is usable. Even if you do not have “users” in the traditional sense, because you are designing your tool only for use within your team or organization, it still needs to be functionally usable by the individuals you intend to use it. Otherwise its accuracy and computational efficiency will be irrelevant, because no one will be able to experience them.

Evaluating Accuracy of an AI model

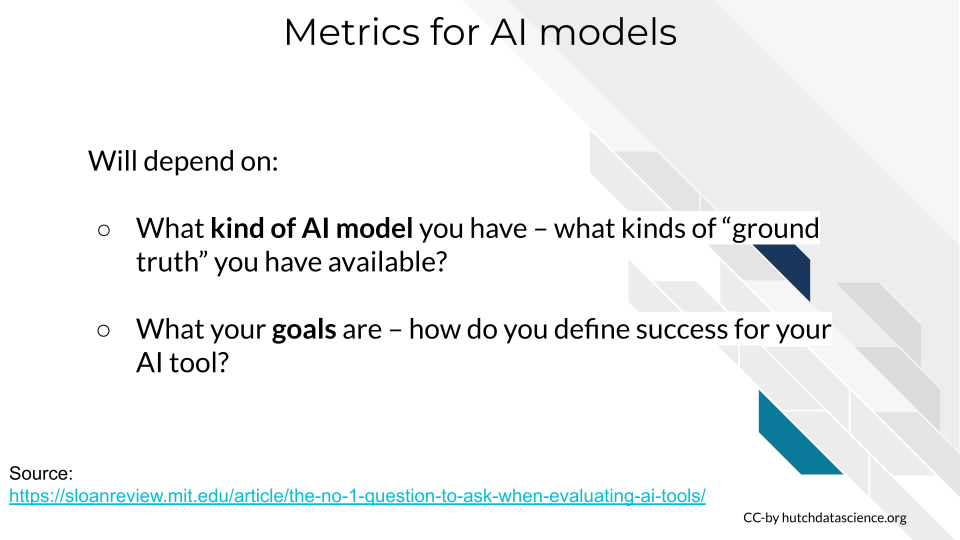

How you evaluate the accuracy of your AI model will be highly dependent on what kind of AI model you have: text to speech, speech to text, text to image, a large language model chatbot, a classifier, etc. This will determine what kinds of “ground truth” you have available. For a speech to text model, for example, what was the speaker actually saying? What percentage of the words did the AI tool transcribe correctly?

Secondly, your evaluation strategies will depend on what your goals are. How do you define success for your AI tool? What were your original goals for this AI tool? Is the tool meeting those goals?

LLM chatbots can be a bit tricky to evaluate for accuracy: how do you know if the response the chatbot gave a user was what the user was looking for? But there are a number of options, and groups who are working on establishing methods and standards for LLM evaluation.
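To make the speech to text example concrete, here is a minimal sketch of one common way to score a transcript against its ground truth: the word error rate (WER), which counts the word-level substitutions, insertions, and deletions needed to turn the model's output into the reference and divides by the number of reference words. The function name and the simple lowercase-and-split tokenization are assumptions made for illustration; a real evaluation would likely need more careful text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumes whitespace tokenization and case-insensitive matching,
    which is a simplification chosen for this sketch.
    """
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference transcript must contain at least one word")

    # Dynamic programming over words (Levenshtein distance).
    # previous[j] = edits needed to turn the first j hypothesis words
    # into the reference words processed so far.
    previous = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            current.append(
                min(
                    previous[j] + 1,                      # reference word missing from output
                    current[j - 1] + 1,                   # extra word in output
                    previous[j - 1] + substitution_cost,  # match or substitution
                )
            )
        previous = current
    return previous[-1] / len(ref_words)


# Hypothetical example: one wrong word out of five reference words.
spoken = "the quick brown fox jumps"
transcribed = "the quick brown box jumps"
print(f"WER: {word_error_rate(spoken, transcribed):.2f}")
```

A lower WER is better, and 0 means the transcript matched the reference exactly. Note that WER can exceed 1 when the model inserts many extra words, so it is not strictly "one minus the percentage of correct words".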

Some examples at this time:

- MOdel kNowledge relIabiliTy scORe (MONITOR)

- google/BIG-bench

- GLUE Benchmark

- Measuring Massive Multitask Language Understanding

Evaluating Computational Efficiency of an AI model

Computational efficiency matters not only because it determines how long it takes to get useful output from your AI tool, but also because it influences your computing bills each month. As mentioned previously, you’ll want to strike a balance between having an efficient and an accurate AI tool.

Besides being shocked by your computing bill each month, there are more forward-thinking ways to keep tabs on your computational efficiency.

Examples of metrics you may consider collecting:

- Average time per job - How much time each job takes to run, on average

- Capacity - Total jobs that can be run at once

- FLOPs (floating point operations) - A measure of the computational cost or complexity of a model or calculation. More about FLOPs.
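As a rough sketch of how the first and last of these metrics might be collected, the snippet below times a batch of jobs and estimates the FLOPs of a single dense (fully connected) layer. The names `time_jobs`, `run_job`, and `dense_layer_flops` are hypothetical, and `run_job` stands in for whatever call actually runs one query against your tool. The FLOPs estimate uses the common approximation of one multiply and one add per weight, and ignores biases and activation functions.

```python
import statistics
import time


def time_jobs(run_job, inputs):
    """Run each input through run_job and summarize wall-clock time per job."""
    durations = []
    for item in inputs:
        start = time.perf_counter()
        run_job(item)
        durations.append(time.perf_counter() - start)
    if not durations:
        raise ValueError("inputs must contain at least one job")
    return {
        "jobs": len(durations),
        "mean_seconds": statistics.mean(durations),
        "max_seconds": max(durations),
    }


def dense_layer_flops(inputs_per_example: int, outputs_per_example: int) -> int:
    """Approximate FLOPs for one example through one dense layer.

    Assumes 2 operations (multiply + add) per weight; biases and
    activations are ignored in this sketch.
    """
    return 2 * inputs_per_example * outputs_per_example


# Hypothetical stand-in for a real query to your AI tool.
def fake_job(text):
    return text.upper()


summary = time_jobs(fake_job, ["first query", "second query", "third query"])
print(f"{summary['jobs']} jobs, mean {summary['mean_seconds']:.6f} s per job")
print(f"Dense layer 1000 -> 500: about {dense_layer_flops(1000, 500):,} FLOPs")
```

Logging a summary like this on a regular schedule, rather than once, makes it easier to notice when a change to the tool makes jobs slower or more expensive.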

Evaluating Usability of an AI model

Usability and user experience (UX) experts are highly valuable to have on staff. But whether or not you have the funds for a UX expert on staff, informal user testing is more helpful than no testing at all!

Here’s a very quick overview of what a usability testing workflow might look like:

- Decide what features of your AI tool you’d like to get feedback on

- Recruit and compensate participants

- Write a script for usability testing - always emphasize that if the participant doesn’t know how to do something, it is not their fault; it’s something that needs to be fixed in the tool!

- Watch 3 to 5 people try to do the task - often 3 is enough to reveal a lot of problems to be fixed!

- Observe and take notes on what was tricky

- Ask participants questions!

We recommend reading this great article about user testing or reading more from this Documentation and Usability course.

There are many ways to obtain user feedback, including usability testing, surveys, and interface analytics.

Some examples of metrics you may want to collect:

- Success rate - how many users were able to successfully complete the task?

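- Task time - how long does it take users to complete the task?

- Net Promoter Score (NPS) - a summary statistic based on asking users, on a scale of 0 - 10, how likely they are to recommend your tool to others. It is the percentage of promoters (ratings of 9 - 10) minus the percentage of detractors (ratings of 0 - 6).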

- Qualitative data and surveys - don’t underestimate the power of asking people their thoughts!
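The quantitative metrics in this list are simple to compute once you have recorded the results of your testing sessions. The sketch below is one possible way to do it; the function names and the sample data are made up for illustration. The NPS calculation follows the standard definition, where ratings of 9 or 10 count as promoters and ratings of 0 through 6 count as detractors.

```python
import statistics


def success_rate(outcomes):
    """Fraction of participants who completed the task (True = success)."""
    if not outcomes:
        raise ValueError("need at least one participant")
    return sum(1 for completed in outcomes if completed) / len(outcomes)


def net_promoter_score(ratings):
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(rating < 0 or rating > 10 for rating in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for rating in ratings if rating >= 9)
    detractors = sum(1 for rating in ratings if rating <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical results from five usability testing participants.
completed_task = [True, True, False, True, True]
task_seconds = [42.0, 55.5, 180.0, 61.0, 48.5]
recommend_ratings = [10, 10, 9, 8, 5]

print(f"Success rate: {success_rate(completed_task):.0%}")
print(f"Median task time: {statistics.median(task_seconds)} s")
print(f"NPS: {net_promoter_score(recommend_ratings):.0f}")
```

The median is used for task time here because one participant who gets badly stuck can distort the mean when only a handful of people are tested. With 3 to 5 participants these numbers are only rough indicators, so they are best read alongside your notes and the qualitative feedback.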