Ethical process

The principles of ethical AI use are still highly debated because the field is evolving rapidly. However, real-world cases increasingly show that ethical consideration belongs in every stage of both using and developing AI.

Disclaimer: The thoughts and ideas presented in this course are not a substitute for legal or ethical advice. They are only meant to give you a starting point for gathering information about AI policy and regulations.

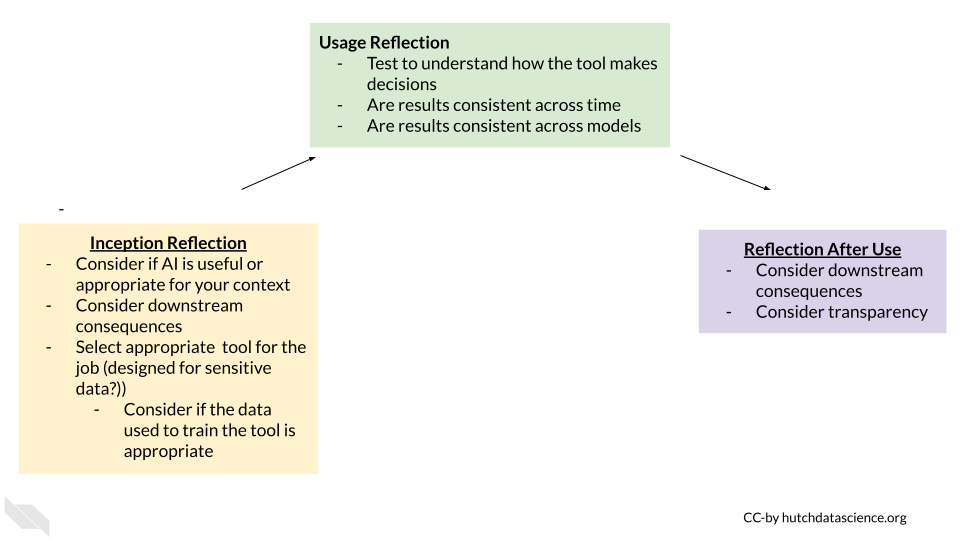

Ethical Use Process

Here is a proposed framework for using AI more ethically. This should involve active consideration at three stages: the inception of an idea, during usage, and after usage.

Reflection during inception of the idea

Consider whether AI is actually needed and appropriate for the potential use, and what the downstream consequences of that use might be.

Consider the following questions:

- What could happen if the AI system worked poorly?

- Are tools mature enough for your specific use?

- Should you start smaller?

- Do you need a tool that is designed for sensitive data?

- Are the tools you are considering well made for the job, and are they transparent about how they work?

- Were the tools trained on data that is appropriate for your use, so that you avoid issues like bias and faulty responses?

Reflection during use

While using AI tools, consider the following:

- Ask the tool how it is making decisions

- Evaluate the validity of the results

- Test for bias by asking bias-related prompts

- Test if the results are consistent across time

- Test if the results are consistent across tools
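
The last two checks in the list above (consistency across time and across tools) can be partly automated. The following is a minimal sketch, not a definitive method: it assumes each AI tool can be wrapped as a Python function that takes a prompt and returns text. The names `tool_a`, `tool_b`, and `tool_c` are hypothetical stand-ins, not real tools, and exact matching after normalization is a crude measure that suits short factual answers better than long free text.

```python
from collections import Counter
from typing import Callable, Dict, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def agreement_rate(responses: List[str]) -> float:
    """Fraction of responses that match the most common normalized response."""
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(normalize(r) for r in responses)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(responses)


def consistency_over_time(ask: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Ask one tool the same prompt several times and measure agreement."""
    return agreement_rate([ask(prompt) for _ in range(runs)])


def consistency_across_tools(tools: Dict[str, Callable[[str], str]], prompt: str) -> float:
    """Ask several tools the same prompt once each and measure agreement."""
    return agreement_rate([ask(prompt) for ask in tools.values()])


# Hypothetical stand-ins; replace these with calls to the tools you actually use.
def tool_a(prompt: str) -> str:
    return "Paris"


def tool_b(prompt: str) -> str:
    return "paris "


def tool_c(prompt: str) -> str:
    return "Lyon"


if __name__ == "__main__":
    question = "What is the capital of France?"
    print(consistency_over_time(tool_a, question))
    print(consistency_across_tools({"a": tool_a, "b": tool_b, "c": tool_c}, question))
```

A low agreement rate does not by itself show that a tool is wrong, but it is a signal to evaluate the results more carefully before relying on them.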

Reflection after use

After using AI tools, consider the following questions:

- How can you be transparent about how you used the tool so that others can better understand how you created content or made decisions?

- What might the downstream consequences of your use be? Should you actually use the responses, or were they not accurate enough? Are there remaining concerns about bias?

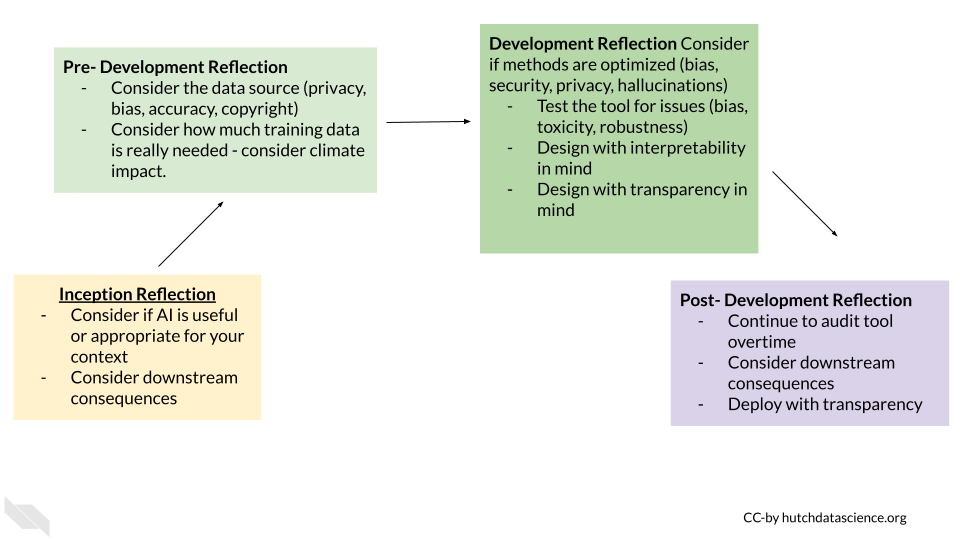

Ethical Development Process

Here is a proposed framework for developing AI tools more responsibly. This should involve active consideration at four stages: the inception of an idea, pre-development planning, during development, and after development.

Reflection during inception of the idea

Consider whether AI is actually needed and appropriate for the potential use, and what the downstream consequences of development might be.

Consider the following questions:

- What could happen if the AI system worked poorly?

- How might people use the tool in ways you did not intend?

- Can you start smaller and build on your idea over time?

Planning Reflections

While you are planning development, consider the following questions:

- Do you have appropriate training data to avoid issues like bias and faulty responses?

- Are the rights of any individuals violated by you using that data?

- Do you need to develop a tool that is designed for sensitive data? How might you protect that data?

- How large does your data really need to be? How can you avoid using unnecessary resources to train your model?

Development Reflection

While actively developing an AI tool, consider the following:

- Make design decisions based on best practices for avoiding bias

- Make design decisions based on best practices for protecting data and securing the system

- Consider how interpretable the results might be given the methods you are trying

- Test the tool as you develop for bias, toxic or harmful responses, inaccuracy, or inconsistency

- Can you design your tool in a way that supports transparency, for example by generating usage logs for users?
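
As one illustration of the usage-log idea in the list above, here is a minimal sketch that wraps a tool so every call is recorded as one JSON line. It assumes the tool can be called as a Python function from prompt to response. The names `with_usage_log` and `echo_tool` are hypothetical and introduced only for this example. Note that logging prompts and responses may capture sensitive data, so a real design would need to apply the same data-protection practices discussed above.

```python
import io
import json
from datetime import datetime, timezone
from typing import Callable, TextIO


def with_usage_log(
    ask: Callable[[str], str], tool_name: str, log_file: TextIO
) -> Callable[[str], str]:
    """Return a wrapped version of `ask` that appends one JSON record per call."""

    def logged_ask(prompt: str) -> str:
        response = ask(prompt)
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "tool": tool_name,
            "prompt": prompt,
            "response": response,
        }
        # One JSON object per line keeps the log easy to read and to audit later.
        log_file.write(json.dumps(record) + "\n")
        return response

    return logged_ask


# Hypothetical stand-in for a real AI tool.
def echo_tool(prompt: str) -> str:
    return prompt.upper()


# Usage: an in-memory buffer stands in for a real log file here.
buf = io.StringIO()
ask = with_usage_log(echo_tool, "echo-demo", buf)
answer = ask("hello")
```

In practice the buffer would be replaced by a file opened in append mode, and users could be given access to their own records so they can see how the tool was used.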

Post-development Reflection

Consider the following after developing an AI tool:

- Audit the tool continually to make sure that no unexpected behavior occurs and that the responses remain adequately interpretable, accurate, and not harmful, especially with new data, new uses, or updates

- Consider how others might use, or already be using, the tool for purposes other than those you intended

- Deploy your tool with adequate transparency about how the tool works, how it was made, and who to contact if there are issues

Summary

In summary, to use and develop AI ethically, consideration of impact should occur across the entire process: when forming an idea, when planning, during active use or development, and afterwards. We hope these frameworks help you approach your AI use and development more responsibly.
