Chapter 11 Sharing Data

11.1 Data sharing is important!

Sharing data is critical for optimizing the advancement of scientific understanding. Now that labs all over the world are producing massive amounts of data, there are many discoveries that can be made by just using existing data.

There are so many excellent reasons to put your data in a repository whether or not a journal requires it:

Sharing your data…

- Makes your project more transparent and thus more likely to be trusted and cited. In fact, one study found that articles linking to the data used (in a repository) were cited more than articles without such information or with other forms of data sharing (Colavizza et al. 2020).

- Helps relieve your own workload, so your email inbox isn’t flooded with requests you probably don’t have time to respond to.

- Allows others to gain even more insights from your data which shows funders that your data will be used to its maximum potential.

- Provides more opportunities for others to replicate your results, which could help advance not only your career, but also our understanding of science and medicine.

11.2 Benefits of data sharing

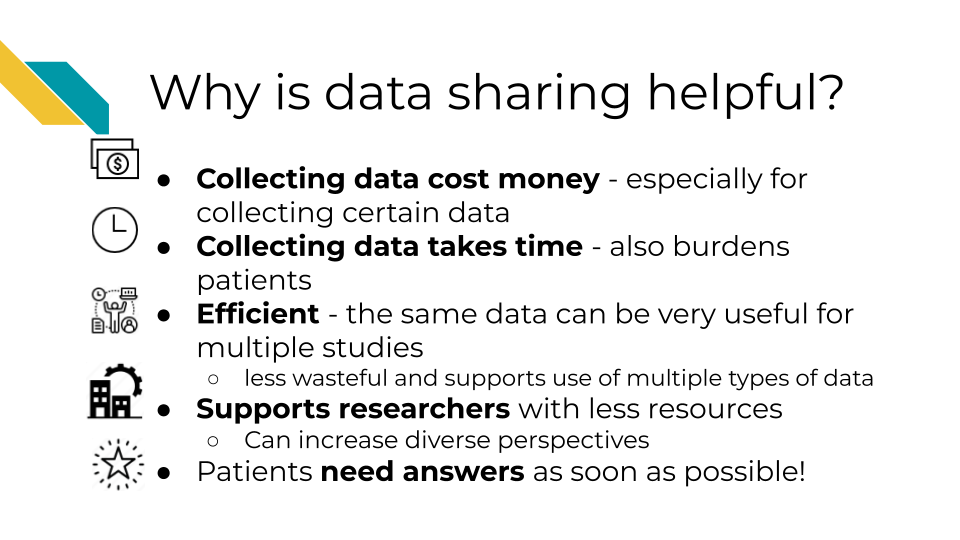

In addition to these benefits to yourself, data sharing has other far-reaching benefits. It can support faster advances in science and medicine by reducing the need to collect new data. This reduces costs, time, and effort, including the burden that data collection places on patients or research participants.

It also helps support researchers at institutes that do not have as many resources to collect data.

Ultimately it can therefore help patients benefit from research faster, as faster advances can be made through more efficient research.

See this description of additional reasons why sharing data is helpful for scientific advancement.

## Data repositories

The best way to share your data is by putting it somewhere that others can download it (and it can be kept private when necessary). There are many repositories out there that handle this for you. We recommend checking out our course on the NIH data sharing policy which describes many important resources for finding appropriate repositories for different types of data. These tools can even be helpful for research that is not related to health.

The repository you choose for sharing data will be highly dependent on the field you work in and the data type that you work with. Do your best to try to understand what the standard practice is for your field.

For a longer list of repositories, we also advise consulting this guide on data repositories published by Nature.

11.2.1 Repositories for journal articles

If your data doesn’t fit a standard recommended repository (such as GEO for genomic data), you can share large datasets using one of the following repositories. Note that some journals or funding agencies may have specific requirements.

11.3 Data Submission tips

Uploading a dataset to a data repository is a great step toward sharing your data! But if the uploaded dataset is unclear and unusable, it might as well not have been uploaded in the first place.

Keep in mind that although you may understand the ins and outs of your dataset and project, it is likely that others who look at your data may not.

To make your data truly shared, you need to take the time to make sure it is well-organized and well-described! There are three files you should make sure to include to help describe and organize your data project:

- A main README file that orients others to what is included in your data.

- A central download script that downloads the data in a way that is ready for re-analysis. See an example here.

- A metadata file that describes what data are included, and how the data files (if more than one) are connected.

11.3.1 Use consistent and clear names

- Make sure that sample and data IDs used are consistent across the project - make sure to include a metadata file that describes your samples in a way that is clear to those who might not have any prior knowledge of the project.

- Sample and data IDs should be consistent with any standardized formatting used in the field.

- Feature names should avoid using genomic coordinates, as these may change with new genome versions.
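
As a rough illustration of the first point, the sketch below compares the sample IDs listed in a metadata file against the IDs attached to the data files. The column name `sample_id`, the example IDs, and the function `compare_ids` are all made up for this example; adapt them to your own project.

```python
import csv
import io

# Hypothetical metadata file contents (normally read from disk).
metadata_csv = """sample_id,tissue
S01,liver
S02,liver
S03,kidney
"""

# Hypothetical IDs parsed from the data file names.
# Note the inconsistent capitalization of "s03".
data_file_ids = ["S01", "S02", "s03", "S04"]


def compare_ids(metadata_text, data_ids):
    """Report IDs that appear in only one of the two sources."""
    reader = csv.DictReader(io.StringIO(metadata_text))
    metadata_ids = {row["sample_id"] for row in reader}
    data_ids = set(data_ids)
    return {
        "missing_from_data": sorted(metadata_ids - data_ids),
        "missing_from_metadata": sorted(data_ids - metadata_ids),
    }


report = compare_ids(metadata_csv, data_file_ids)
print(report)
```

Here the comparison is case-sensitive on purpose, so `S03` and `s03` are reported as a mismatch rather than silently treated as the same sample.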

11.3.2 Make your project reproducible

Reproducible projects are able to be re-run by others to obtain the same results.

The main requirements for a reproducible project are:

- The data can be freely obtained from a repository (this may be summarized data for the purposes of data privacy).

- The code can be freely obtained from GitHub (or another similar repository).

- The software versions used to obtain the results are made clear by documentation or providing a Docker container (more advanced option).

- The code and data are well described and organized with a system that is consistent.
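
One lightweight way to document software versions is to write them to a file that travels with the code. The sketch below assumes a Python-based project and uses only the standard library; the function name `describe_environment` and the package names passed to it are placeholders, and projects in other languages would use that language's own tooling.

```python
import platform
from importlib import metadata


def describe_environment(package_names):
    """Return one line per component describing the installed version."""
    lines = [f"python=={platform.python_version()}"]
    for name in package_names:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            # Record the gap explicitly instead of skipping it.
            lines.append(f"{name}: not installed")
    return lines


# The package names here are only illustrative.
for line in describe_environment(["pip", "a-package-that-does-not-exist"]):
    print(line)
```

Saving this output alongside your results gives others a starting point for recreating your environment, though a container captures far more detail.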

Check out our introductory reproducibility course, advanced reproducibility course, and our course on containers for more information.

11.3.3 Have someone else review your code and data!

The best way to find out if your data are usable by others is to have someone else look over your code and data! There are so many little details that go into your data and projects. Those details can easily lead to typos and errors upon data submission that can cause confusion when others (or your future self) are attempting to use that data. The best way to test if your data project is usable is to have someone else (who has not prepared the data) try to make sense of it.

For more tips on how to make data and code reproducible, see our Intro to Reproducibility course.

11.4 Why metadata is important

Metadata are critically important descriptive information about your data.

Without metadata, the data themselves are useless or at best vastly limited.

Metadata describe how your data came to be, what organism or patient the data are from and include any and every relevant piece of information about the samples in your dataset.

At this point it’s important to note that if you work with human data or samples, your metadata will likely contain personally identifiable information (PII) and protected health information (PHI). It’s critical that you protect this information! For more details on this, we encourage you to see our course about data management.

11.5 How to create metadata?

Where do these metadata come from? They come from the notes and experimental design of anyone who played a part in collecting or processing the data and its original samples. If this includes you (meaning you have collected data and need to create metadata), let’s discuss how metadata can be made in the most useful and reproducible manner.

11.5.1 The goals in creating your metadata:

11.5.1.1 Goal A: Make it crystal clear and easily readable by both humans and computers!

Some examples of how to make your data crystal clear:

- Look out for typos and spelling errors!

- Don’t use acronyms unless necessary. If necessary, make sure to explain what the acronym means.

- Don’t add extraneous information. For example, perhaps items that are relevant to your lab internally, but not meaningful to people outside of your lab. Either explain the significance of such information or leave it out. It is, however, good to keep a record of such information for your lab elsewhere.

- Make your data tidy.

> Tidy data is a standard way of mapping the meaning of a dataset to its structure. In tidy data:
>
> - Every column is a variable.
> - Every row is an observation.
> - Every cell is a single value.
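
To make the tidy data idea concrete, here is a minimal sketch that reshapes a small, made-up table. The column names (`weight_day1`, `weight_day7`), the values, and the function `to_tidy` are invented for illustration only.

```python
# Hypothetical "wide" table: one row per sample, one column per time point.
# The day is hidden inside the column names instead of being its own variable.
wide_rows = [
    {"sample_id": "S1", "weight_day1": 20.1, "weight_day7": 22.4},
    {"sample_id": "S2", "weight_day1": 19.8, "weight_day7": 21.0},
]

PREFIX = "weight_day"


def to_tidy(rows):
    """Return one row per observation (one sample on one day)."""
    tidy = []
    for row in rows:
        for column, value in row.items():
            if column.startswith(PREFIX):
                tidy.append({
                    "sample_id": row["sample_id"],
                    "day": int(column[len(PREFIX):]),  # variable gets its own column
                    "weight": value,                   # each cell holds a single value
                })
    return tidy


tidy_rows = to_tidy(wide_rows)
for row in tidy_rows:
    print(row)
```

After reshaping, `sample_id`, `day`, and `weight` are each a column, and each row is a single measurement.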

11.5.1.2 Goal B: Avoid introducing errors into your metadata in the future!

To help avoid future metadata errors, check out this excellent article discussing metadata design by Broman & Woo. We will very briefly cover the major points here but highly suggest you read the original article.

Be Consistent - Whatever labels and systems you choose, use them universally. This applies not only to your metadata spreadsheet but also to anywhere you discuss your metadata variables.

Choose good names for things - avoid spaces, special characters, or unusual and undescribed jargon.

Write Dates as YYYY-MM-DD - this is a global standard and less likely to be messed up by Microsoft Excel.

No Empty Cells - If a particular field is not applicable to a sample, you can put `NA`, but empty cells can lead to formatting errors or just general confusion.

Put Just One Thing in a Cell - resist the urge to combine variables into one; you have no limit on the number of metadata variables you can make!

Make it a Rectangle - This is the easiest way to read data, for a computer and a human. Have your samples be the rows and variables be columns.

Create a Data Dictionary - Have a document where you describe what your metadata means in detailed paragraphs.

No Calculations in the Raw Data Files - To avoid mishaps, you should always keep a clean, original, raw version of your metadata that you do not add extra calculations or notes to.

Do Not Use Font Color or Highlighting as Data - This only adds confusion for others if they don’t understand your color coding scheme. In addition, not all data software and programming languages can interpret color. Instead, create a new variable for anything you might be tempted to color code.

Make Backups - Metadata are critical; you never want to lose them because of spilled coffee on a computer. Keep the original backed up in multiple places. If your data does not contain private information, we recommend writing your metadata in something like Google Sheets because it is both free and saved online, so it is safe from computer crashes. Check out this description of strategies for keeping data resilient in case you use a server or a cloud option to store your data.

Use Data Validation to Avoid Errors - set data types and have unit tests check whether data types are what you expect for a given variable. In an upcoming chapter we will discuss how to set up tests like this using the `testthat` R package.
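
Several of the points above (dates as YYYY-MM-DD, no empty cells, expected data types) can be checked automatically. The following is a minimal sketch in Python using only the standard library, not the approach covered later in this course. The column names, the example rows, and the functions `validate_row` and `validate_metadata` are hypothetical.

```python
import csv
import io
import re
from datetime import date

# Hypothetical metadata with two deliberate problems:
# S02 has a non-standard date and S03 has an empty cell.
metadata_csv = """sample_id,collection_date,age_years
S01,2021-03-04,34
S02,03/05/2021,41
S03,2021-03-06,
"""

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_iso_date(text):
    """True only for a real calendar date written as YYYY-MM-DD."""
    if not ISO_DATE.fullmatch(text):
        return False
    try:
        date.fromisoformat(text)
    except ValueError:
        return False
    return True


def validate_row(row):
    """Return a list of problems found in one metadata row."""
    problems = []
    for column, value in row.items():
        if value is None or value.strip() == "":
            problems.append(f"{column}: empty cell, use NA instead")
    collected = (row.get("collection_date") or "").strip()
    if collected not in ("", "NA") and not is_iso_date(collected):
        problems.append("collection_date: expected YYYY-MM-DD")
    age = (row.get("age_years") or "").strip()
    if age not in ("", "NA") and not age.isdigit():
        problems.append("age_years: expected a whole number")
    return problems


def validate_metadata(text):
    """Map each problematic sample ID to its list of problems."""
    report = {}
    for row in csv.DictReader(io.StringIO(text)):
        problems = validate_row(row)
        if problems:
            report[row["sample_id"]] = problems
    return report


report = validate_metadata(metadata_csv)
for sample_id, problems in report.items():
    print(sample_id, problems)
```

Running a check like this before every submission catches formatting slips early, while the raw metadata file itself stays untouched.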