Chapter 5 Data Ethics

Now that we have covered the basics of data management, we will take a moment to consider and reflect on the implications of our use and sharing of data.

5.1 What is data ethics?

Data ethics involves the consideration of:

- data collection

- data security

- data privacy

- data maintenance

- data sharing

It also involves mindfulness about how our research can ultimately affect research participants and other individuals, or fail to benefit them when the research lacks inclusivity and equity.

Importantly, we do not yet have established societal norms or protocols for every aspect of medical research, particularly with respect to new types of data and new technologies. Many topics are still under debate, especially when it comes to cutting-edge research.

However, general principles of ethics can be helpful and involve practices for research integrity, consideration for social justice, and transparency. Health care and research ethics can also be helpful in evaluating practices for data management and use.

5.1.1 Before and after research

Data ethics requires thoughtfulness throughout the planning and research process, to produce research that benefits society and does as little harm as possible, as well as mindfulness about what happens after the research is complete and published.

Researchers need to consider how their work will resolve unanswered questions and who the research might help, as well as consider how others might use or misuse their data, code, and results in the future (Lipworth, Mason, and Kerridge 2017; Teoli and Ghassemzadeh 2021).

5.1.2 Considerations before

Ethical research should involve consideration of how data should be collected, so that certain individuals are not left out of reaping the benefits of important research. For example, women, non-binary individuals, disabled individuals, people of certain ethnic backgrounds, and people at the intersections of various demographic factors have historically been left out of clinical trials or, when included, had their data inadequately recorded (Clark et al. 2019). As a further example, clinical trials often ask about sex or gender with only binary options (overlooking people without a binary sex and non-binary gendered individuals), resulting in a failure to collect important information that could affect clinical outcomes, research results, and communication about results (Chen et al. 2019).

Beyond this, even basic studies have historically often neglected to evaluate female animal models, even though including them can provide a greater understanding of how the research may successfully translate to more individuals. Yet another example is the historical lack of diversity in genomic reference datasets. To learn more about how social injustice, sexism, and other societal aspects have influenced bioethical and therefore data ethics practices, see Farmer (2004).

5.2 After Considerations

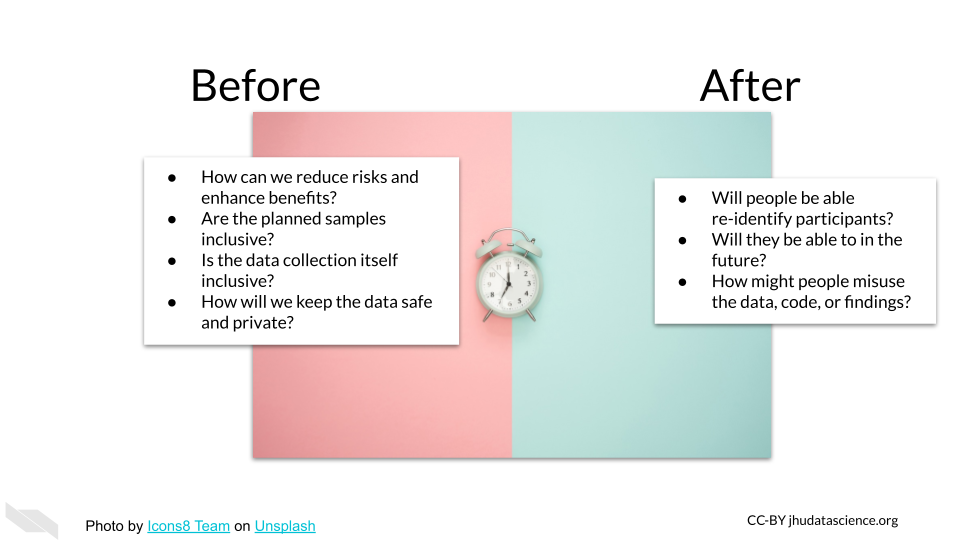

While data sharing can create wonderful opportunities for secondary analysis, we also need to consider the harm that sharing our data could cause and make sure that we share it mindfully. With more advanced forms of genomic and imaging technology, and increased use of data from our phones, we have much more information (including real-time data) about the subjects in our studies. The risk to our subjects if others identify them is therefore much higher than it used to be (Byrd et al. 2020).

Overall, there is a continuum of risk across the various types of data that we as researchers collect. Some forms of data, such as data derived from model organisms, pose essentially no risk. Intermediate forms of data, such as summarized counts across a set of human samples, pose more risk. Raw data, and in particular individual-level data such as whole genome sequencing data, pose great risk for identification (Byrd et al. 2020).
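To make the difference between individual-level data and summarized counts concrete, here is a minimal Python sketch. It is an illustration only, not a method described by Byrd et al. (2020): the function name `summarize_counts`, the field names, the example records, and the `min_cell_size` threshold of 5 are all assumptions made for this example. Hiding very small counts is included because a group of one or two people can still point to specific individuals, but the appropriate threshold (if any) depends on your institution's policies.

```python
from collections import Counter


def summarize_counts(rows, key, min_cell_size=5):
    """Collapse individual-level rows into per-category counts.

    Counts below min_cell_size are replaced with None so that very small
    groups are not reported exactly. The default of 5 is an illustrative
    assumption, not a recommended threshold.
    """
    counts = Counter(row[key] for row in rows)
    return {
        category: (n if n >= min_cell_size else None)
        for category, n in counts.items()
    }


# Hypothetical records; all identifiers and values are invented for illustration.
records = (
    [{"participant_id": f"A{i}", "diagnosis": "condition_a"} for i in range(7)]
    + [{"participant_id": f"B{i}", "diagnosis": "condition_b"} for i in range(2)]
)

summary = summarize_counts(records, key="diagnosis")
print(summary)
```

The summary no longer contains participant identifiers, which is why it sits lower on the risk continuum than the raw records. It is still not risk-free, so a sketch like this does not replace review by experts at your organization.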

Note that recent advances in AI show that chest X-ray images can now be used to re-identify individuals (Packhäuser et al. 2022). In addition, some histopathology images are also re-identifiable; see Ganz et al. (2025) for guidance about how to share images more safely.

By the time you read these suggestions, they may be out-of-date or they may not be in alignment with institutional regulations, so please consult with experts at your organization.

5.2.1 Why does it matter that research subjects might be identifiable to others?

In some cases open awareness about patients with certain types of cancers or diseases can be useful to allow other researchers and patients to find these individuals to encourage additional research and patient support group participation (especially for rare diseases or conditions).

However, such information can put these individuals at risk for difficulty with insurance and employment, as well as at risk for other forms of discrimination. Furthermore, research data often also contains basic information about individuals, such as their address, which can be potentially deleterious for the safety of those individuals. New forms of research data from apps on our phones, such as data collected from social media, can pose more complicated risks because they capture the behaviors of research participants (Seh et al. 2020).
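As a small illustration of the point about basic information such as addresses, the following Python sketch drops a set of direct identifier fields from a record before it is shared. The field names in `DIRECT_IDENTIFIERS`, the helper `strip_direct_identifiers`, and the example record are hypothetical and chosen only for this example. Removing these fields does not by itself make data anonymous, since the remaining fields (or genomic and imaging data) may still allow re-identification.

```python
# Hypothetical field names treated as direct identifiers; a real project
# would take this list from its own data dictionary and institutional policy.
DIRECT_IDENTIFIERS = frozenset({"name", "address", "phone", "email"})


def strip_direct_identifiers(record, identifiers=DIRECT_IDENTIFIERS):
    """Return a copy of record without the listed identifier fields."""
    return {
        field: value
        for field, value in record.items()
        if field not in identifiers
    }


# Invented example record; no real person is described.
participant = {
    "name": "Example Person",
    "address": "123 Example Street",
    "age_group": "40-49",
    "diagnosis": "condition_a",
}

shareable = strip_direct_identifiers(participant)
print(shareable)
```

The function returns a new dictionary and leaves the original record untouched, so the full record can stay in secured storage while only the reduced copy is considered for sharing.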

Beyond the risk that data breaches pose to research participants, such breaches also cause harm to the research institutes where the breach occurred. Reputations and funding opportunities can be greatly compromised. Transparency and/or informed consent are discussed below as ways to mitigate these risks.

5.2.2 Why else does data protection matter at the individual level?

If data gets manipulated or corrupted, this can result in false research findings, altered treatment plans by physicians, and more (Seh et al. 2020).

If patients are concerned that information will be used against them, there is some evidence that they are less likely to be forthcoming and honest with their providers. This poses concerns for data quality as well as trust in clinicians and health systems (Nong et al. 2022).

Perpetuation of inequity is often cyclical. Considerations before research shape our options after research. For example, if people are excluded from the research process, data models are more likely to be biased against those populations.

We will discuss what can be done to reduce the risks that your research poses to research participants and others.

5.3 Data ethics history

To understand current theories about how best to deal with our research ethics conundrums, it is helpful to be aware of the history of biomedical research in general.

Most regulations of biomedical research stem from historical ill treatment of research participants. This ill treatment has taken many forms, from outright ill intent to well-intended but neglectful research practices, often due to a lack of awareness of the potential consequences. Avoiding such harm has become especially difficult more recently, as our potential to create and share data has dramatically expanded.

5.3.1 Historical incidents:

Here are a couple of famous historical examples of medical research that was performed in a harmful manner. These incidents have shaped policy about ethical research, in terms of advocacy for informed consent (more on that in the next section), as well as recognition of the role of social injustice in research.

Tuskegee syphilis trial: A study in Tuskegee, Alabama about the outcomes of untreated syphilis in Black males (1932-1972) in which the patients were told they were being treated but were in fact not being treated (McVean 2019).

Henrietta Lacks and HeLa Cells: In 1951, a patient named Henrietta Lacks was treated for cervical cancer at Johns Hopkins. Her cancerous cells turned out to be uniquely capable of surviving and reproducing and have been used widely in research for decades for many discoveries. Her family did not receive money from the companies that profited from her cells, and for decades her family was often not asked for consent as doctors and scientists revealed her name and medical records publicly (“Henrietta Lacks: Science Must Right a Historical Wrong” 2020).

See here for additional examples.

5.4 Principles of Bioethics

Several general concepts for healthcare ethics, and by extension medical research ethics, have been described in commonly used frameworks, including the four pillars and the seven guiding principles. In the wake of medical and scientific abuses during WWII and beyond, several ethical principles and codes emerged. The Belmont Report (1979) defines the core bioethical pillars that drive ethical analysis in healthcare and research even today.

5.4.1 The four pillars (this discussion is from Melvin (2020)):

- The pillar of beneficence

This pillar means that healthcare and its research must support the well-being of the patients. This includes reducing pain and helping to increase their overall quality of life.

- The pillar of non-maleficence

Harm to patients must be minimized. This includes not causing intentional harm but also minimizing incompetency and accidental harm. This means for research studies and treatments, only conducting those where benefits outweigh the risks.

- The pillar of justice

Healthcare must be provided to all equally and equitably regardless of race, gender, sex, socioeconomic class, religion, ethnicity, sexual orientation, age, or any other aspect of an individual’s identity. Equity specifically means that those who are societally disadvantaged should be even further aided.

- The pillar of autonomy (self-determination)

Patients need to make their own decisions about their health. They should be equipped with all information about their health and then respected and supported in their decision. This includes informed consent (Commissioner 2020), which means that before a patient can truly consent, they need to be fully informed of the risks and the ins and outs of any procedure, treatment, or research study participation.

5.4.2 The NIH Clinical Center Seven Principles

The NIH published its “Guiding Principles for Ethical Research” (2015), which stem from the four pillars described above to provide a framework for ensuring the protection of people who volunteer for clinical research.

1) Social and clinical value

The value of the study needs to be important enough to justify the discomfort or inconvenience that research participants may experience.

2) Scientific validity

Studies should be designed so as to effectively gain more understanding about the scientific question in consideration. In other words, the efforts of the research participants should not be wasted on a study that is poorly designed and will not add to scientific understanding.

3) Fair subject selection

Participants for studies should be selected based on the scientific question. Groups of people should not be excluded without a good reason. Participants should benefit from the understanding that the study aims to gain. In other words, participants should not accept risk only for the benefit of others.

4) Favorable risk-benefit ratio

Risks should be minimized, while benefits should be maximized. Risks are not just physical; they can also be mental, financial, social, or of other kinds.

5) Independent review

To reduce conflicts of interest influencing the care of research participants, study plans should be reviewed by others who are trained to consider the ethical implications of such studies.

6) Informed consent

Individuals should voluntarily decide if they wish to participate (where possible; some exceptions include very young children or those who are incapacitated) (“Informed Consent” 2023). Participants should be informed about the risks (and potential uncertainty around those risks). This must be done in a way in which the individual can actually understand the information; for example, it should be in a language that the individual understands. The information needs to be accurate and individuals should not feel coerced to participate.

7) Respect for potential and enrolled subjects

Individuals should be treated with respect for the entirety of the process including:

- respecting the privacy of their information

- respecting their right to change their mind, including providing them any new information about risks or benefits that might cause them to change their mind

- respecting their welfare and providing treatment if needed and removing individuals for their welfare if needed

- respecting their welfare and their right to knowledge by letting them know what was learned from the research

5.5 Ethical Principles for Data

These guidelines are also very useful for ensuring inclusive, transparent, open, and respectful data management practices:

CARE Principles for Indigenous Data Governance, which largely focus on the self-determination of Indigenous people and the usage of their data, as well as consideration for the impact and purpose of data:

- C stands for: Collective Benefit

- A stands for: Authority to Control

- R stands for: Responsibility

- E stands for: Ethics

FAIR Principles aim to promote open data sharing:

- F stands for: Findable

- A stands for: Accessible

- I stands for: Interoperable

- R stands for: Reusable

Researchers are encouraged to consider the CARE and FAIR principles together.

5.6 Concept of Consent

We have already talked about the concept of informed consent. Obtaining consent should also include the following elements (based on “IOWA STATE UNIVERSITY Institutional Review Board Recruitment of Research Participants” (2015), “Informed Consent” (2023), and the author’s thoughts):

- Individuals should not feel pressured and should have adequate time to make the decision.

- Individuals should not experience undue influence, be coerced, or be manipulated. They should not feel pressured by the individual recruiting, such as a boss or someone else in a position of power, or by offers in exchange for participation that would sway the decision.

- Individuals should not receive a misleading or overstated presentation of the potential benefits of the study.

- Individuals should be made aware of the uncertainty associated with risks and benefits.

- Individuals should have the capacity to understand the risks and benefits (this involves consideration for language barriers, intellectual capacity, emotional capacity, stress, sleep loss and other forms of physical strain).

- Individuals should be able to withdraw consent at any time.

- Individuals should be respected throughout the process, including consideration for the cultural values of the recruited populations.

- Consent forms and processes should be reviewed by people with diverse expertise, such as understanding of ethics, equity, and patient and community experience.

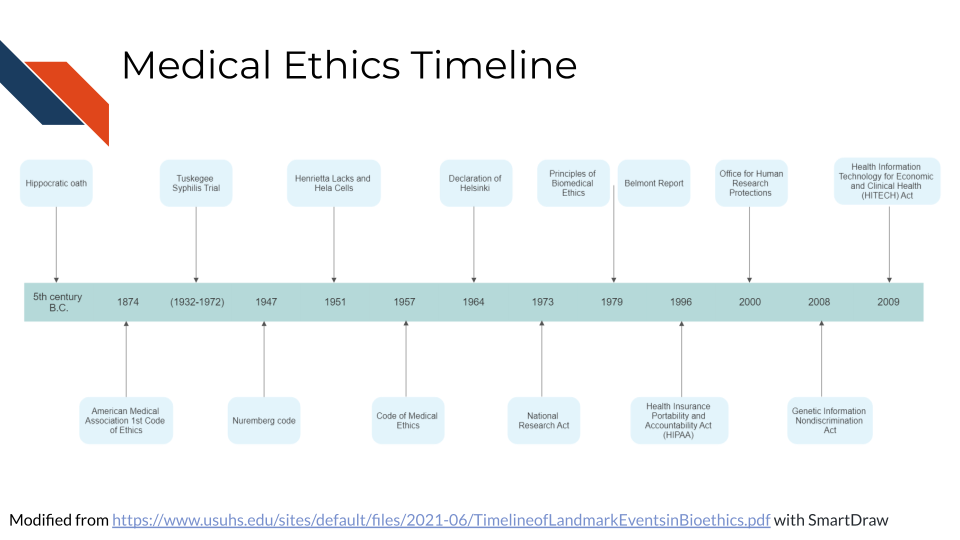

5.7 Medical Ethics Timeline

It is helpful to get a sense of the timing when society established ethical medical standards and laws. Here we will point out important events in the timeline of medical ethics, with an emphasis on the United States.

See here for a more in-depth timeline.

5.7.1 The Hippocratic Oath (~ 4th century BC)

The concept of medical ethics in the Western world dates back all the way to the original Hippocratic Oath, written between the 5th and 3rd centuries BC (“Hippocratic Oath” 2023). It established concepts like confidentiality and non-maleficence.

“What I may see or hear in the course of the treatment or even outside of the treatment in regard to the life of men, which on no account one must spread abroad, I will keep to myself, holding such things shameful to be spoken about.” (“Hippocratic Oath” 2023)

5.7.2 American Medical Association Code of Medical Ethics (1847)

The American Medical Association code of ethics was first established in 1847 (Riddick 2003). The next major code publication was in 1957. The code was not law, but it set standards for care. The 1957 code describes the fact that there might be special cases in which confidence cannot always be kept.

See Higgins (1989) and Moskop et al. (2005) for more about the history of medical ethics codes.

5.7.3 Declaration of Helsinki (1964)

The Declaration of Helsinki was published by the World Medical Association (WMA) and is considered “the world’s most widely recognized ethical principle for medical research involving humans” (Kurihara et al. 2024). It describes a set of principles for “medical research involving human subjects, including research on identifiable human material and data.” It has been amended several times and the WMA aims to keep it up to date.

It outlines that research subjects’ welfare is the priority, and that they have a right to self-determination and to informed consent. Risks and benefits should be carefully considered and research should be discontinued if risks are determined to be too high (Association 1964).

5.7.4 International Covenant on Civil and Political Rights (1966)

The United Nations adopted the concept of “free consent” (similar to informed consent) into international law (“Informed Consent” 2023).

5.7.5 Beecher’s Ethics and clinical research (1966)

In 1966, Henry Beecher published an article called “Ethics and clinical research”, outlining serious ethical issues in biomedical research at the time. This encouraged the creation of additional guidelines (Beecher 1966; Stark 2016).

5.7.6 The Belmont Report (1979)

The Belmont Report was written to describe guidelines for human subjects in biomedical and behavioral research. The report aims to provide a general framework for ethical consideration of research. It states that:

“These principles cannot always be applied so as to resolve beyond dispute particular ethical problems. The objective is to provide an analytical framework that will guide the resolution of ethical problems arising from research involving human subjects” (“The Belmont Report,” n.d.).

Here we briefly describe some of the major aspects of the report (“The Belmont Report,” n.d.).

There are 3 ethical principles defined:

- Respect for Persons

People should be allowed autonomy to use their judgment to make decisions for themselves. Those that cannot make all decisions for themselves, such as children or those who are incapacitated should be protected.

- Beneficence

Harm to human subjects should be minimized and benefits should be maximized.

- Justice

Benefits and burdens of research should be distributed equally.

“Justice demands both that these not provide advantages only to those who can afford them and that such research should not unduly involve persons from groups unlikely to be among the beneficiaries of subsequent applications of the research” (“The Belmont Report,” n.d.).

The application of these principles should involve the following:

- Informed Consent

Consent should involve: information, comprehension, and voluntariness.

- Assessment of Risks and Benefits

Potential risks and benefits should be thoroughly evaluated, including if human subjects are truly necessary.

Benefits and risks must be “balanced” and shown to be “in a favorable ratio” (“The Belmont Report,” n.d.).

- Selection of Subjects

There must be fair procedures and outcomes in the selection of research subjects. Less burdened individuals should be called upon first to take on research burdens.

Individuals whose circumstances might make them more readily used for research (such as those who are incarcerated or institutionalized) should be protected.

5.7.7 Health Insurance Portability and Accountability Act (HIPAA) (1996)

Medical confidentiality became law in the United States. Protected health information and identifiable health information must not be shared with anyone outside of certain covered entities without consent. Covered entities include clinicians, insurance companies, and health care government agencies.

5.7.8 Genetic Information Nondiscrimination Act (GINA) (2008)

The Genetic Information Nondiscrimination Act prohibits employers and health insurance companies from using genetic information to discriminate against individuals.

5.7.9 Health Information Technology for Economic and Clinical Health (HITECH) Act (2009)

HITECH builds on HIPAA to add protections for electronic protected health information (PHI), as electronic records became more common in health care, with three basic rules (Security 2021):

- Privacy Rule - Access should be limited to as few individuals as possible

- Security Rule - Safeguards should be implemented to protect electronic PHI, including technical means and physical means

- Breach Notification - Individuals should be notified in a timely manner about breaches that may have involved their PHI

5.8 Causes of research misconduct

A research study by Davis, Riske-Morris, and Diaz (2007) investigated closed cases from the Office of Research Integrity (ORI), using statements from the reports to evaluate reasons why researchers sometimes do not perform research responsibly.

The researchers described a variety of reasons that emerged, including:

- Personal and professional stressors

    - pressure to produce

    - overworked

    - insufficient time

    - mental health issues

    - overcommitted

    - lack of support

    - stress

- Organizational climate factors

    - insufficient mentoring/supervision

    - poor communication/coordination

    - substandard lab procedures

    - lost or stolen data

- Job Insecurities

    These cases described situations in which a researcher felt hesitant to ask for help due to a perception of job insecurity.

    - Inappropriate responsibility

    - Competition for position

    - Language Barrier

- Rationalizations

    - Lack of control over environment

    - Disseminating findings too quickly

- Personal Inhibitions

    - Difficult job/task

    - Frustrations

- Another category of Rationalizations

    - Fear

    - Apathy/Dislike

- Personality traits

    - Impatience

    - Laziness

    - Personal Need for Recognition

The article states that pressures to do public good can also be viewed as a personality factor since it seems indicative of dogma that can compromise the integrity of the scientific process, thus resulting in research misconduct.

While personality traits were identified, the above reasons suggest that if researchers have more support to recognize and overcome stressors and are motivated to do quality research instead of attempting to obtain metrics for promotion, perhaps some of these reasons can be mitigated.

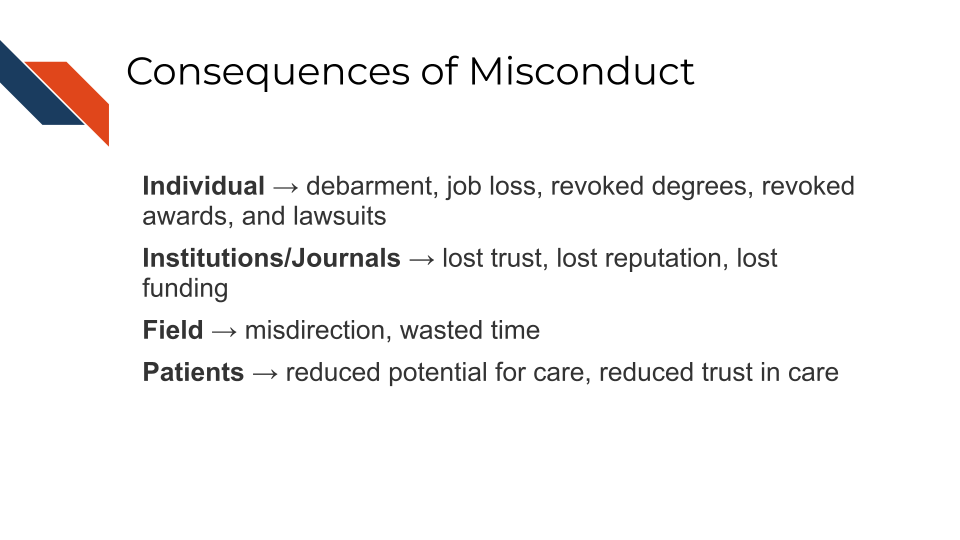

5.9 Consequences of research misconduct

Research misconduct, whether due to malicious intent or unintentional neglect, can have far-reaching consequences. This section is based on Davis, Riske-Morris, and Diaz (2007) and National Academies of Sciences et al. (2017).

5.9.1 The Researcher

According to a study of misconduct cases in the Office of Research Integrity (ORI), the investigator is always the accused and is scrutinized by their institution and federal agencies. If found guilty, they face many consequences including possible debarment, job loss, revoked degrees, revoked awards, and lawsuits.

5.9.2 The Institute and Journals

Institutes and journals can also face consequences of research misconduct, as they suffer reputational losses, which may reduce future opportunities for additional funding or support.

5.10 Misconduct prevention

Several models have been proposed to reduce misconduct (Mousavi and Abdollahi 2020; Kumar 2010). They center on evaluating the pressures in academic evaluation and advancement and avoiding the use of simple quantitative metrics, such as the number of publications that a researcher publishes, without more nuanced evaluation. They point out that a lack of experience or knowledge can also increase the risk of misconduct. It is suggested that the quality of research be prioritized over quantity. This would reduce pressures on academics to obtain flashy-looking metrics and instead let them focus on doing work with the utmost integrity and rigor in a system where evaluations could better value these qualities.

5.11 Summary

In summary, we have covered the following concepts in this chapter:

- Data ethics involves the ethical collection, storage, sharing and use of data to minimize harm and maximize benefits to research participants and society.

- Data ethics considerations should happen both before and after research is conducted. Researchers need to consider how their data could be misused after publication.

- Historical examples of unethical research highlight the need for data ethics principles, like informed consent and respect for research participants. Examples include the Tuskegee Syphilis Study and the case of Henrietta Lacks.

- Key principles of data ethics include: beneficence, non-maleficence, justice, autonomy and informed consent.

- Medical ethics has evolved over time with milestones like the Hippocratic Oath, the AMA Code of Medical Ethics, the Declaration of Helsinki, and the Belmont Report, and the establishment of laws like HIPAA, GINA and HITECH.

- Research misconduct can happen due to personal, organizational and job related factors. It can negatively impact researchers, institutes, research fields, patients and public trust.

- Misconduct prevention involves prioritizing research quality over quantity and reducing pressures that promote misconduct. Providing mentoring, supervision and support can also help prevent misconduct.