Chapter 9 Optimization

In our last chapter, we will explore ways to optimize our completed workflow.

Generally, in computing, we are working with the following finite resources:

Computing time: a task can take milliseconds to days to complete. We find ways to reduce our computing time so that we have results sooner.

Central Processing Unit (CPU) usage: a task requires at least one CPU to be the “brain” of the computer to run computation, move data around, and access the memory. Tasks can make use of multiple CPUs to process in parallel.

Memory usage (RAM): a task needs a place to store data while computing on the job. Reducing the memory usage can allow the task to be more efficient.

Disk usage: A task usually requires some input data and have an output, and needs disk storage to store the information when the task isn’t running.

Changing usage of any of these resources often affect other resources. For instance, there’s often a tradeoff between computing time and memory usage. In order to be efficient with these finite resources, we will introduce some optimization methods that are common in building an efficient workflow, and provide an example on a simple optimization method, “Embarrassingly Parallel Scatter-Gather”.

9.1 Common optimizing/parallelizing methods

9.1.1 Memory optimization

To optimize the amount of memory used for a task, you can profile the task to understand how much memory is being used and where the bottlenecks are. Most programming languages have a large selection of memory profilers to analyze the performance of your code, and they can be starting points to consider how to use less memory. When a bottleneck is found, you might consider to use a more efficient algorithm to reduce memory usage. Or, perhaps you might use more optimal data structures, such as databases or sparse data structures, that better fit the data type of your problem.

Another common memory analysis is to understand how memory usage scales relative to the input for the task. For example, as you increase the VCF file size for a task, how much does the memory usage scale? Is there something you can do so that it scales with a smaller magnitude?

A technique you may want to consider if your memory usage scales with your input data size is that you could break down the input into smaller parts, and run your task on these smaller parts, each requesting smaller amount of memory. This wouldn’t reduce the total amount of memory you use, but many computing backends prefer many tasks using small amounts of a memory instead of a single job requiring a large amount of memory. This would also help optimizing computing time. Below, we look at different ways of parallelization.

9.1.2 Embarrassingly Parallel Scatter-Gather

A sub-task is called “Embarrassingly Parallel” if the sub-task can be run completely independent of other sub-tasks to finish the task. When we run multiple samples in our workflow, it is a form of “Embarrassingly Parallel”, because processing each tumor-normal pair through the workflow is done independently of other samples on separate computers (also known in high performance computing as “nodes”). This technique reduces the computing time and breaks down CPU and memory usage into smaller, more affordable resource requests. Later in this chapter we will show an detailed example of using this technique to split a BAM into individual chromosomes to run a resource demanding task.

9.1.4 Multiprocessing (Distributed-Memory Parallelism)

Sometimes, the scale of the work requires coordination of multiple computers working dependently of each other. This is called “multiprocessing”. This is common in working with large scale data, using tools such as Spark or Hadoop. We will not be talking about multiprocessing extensively in this guide.

9.1.5 Graphical Processing Units (GPUs)

Graphical processing units are an additional processing tool to CPUs, in which a different computer hardware is used. There are usually a few handful of CPUs on a computer, but there can be thousands of GPUs, each capable of doing a small and simple task quickly. GPUs are used for graphical displays, and scientific computing problems such as training neutral networks and solving differential equations. We will not be talking about GPUs extensively in this guide.

9.2 Scatter-Gather on chromosomes

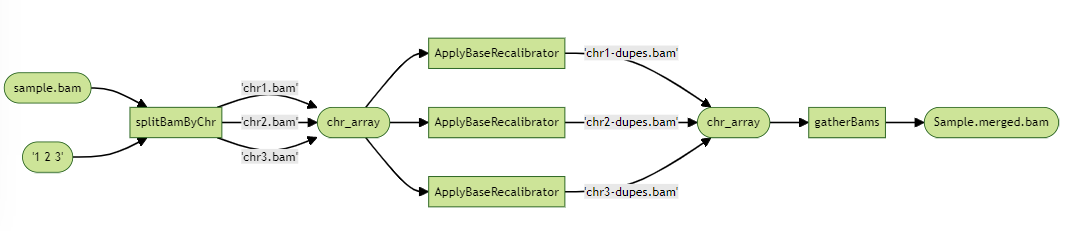

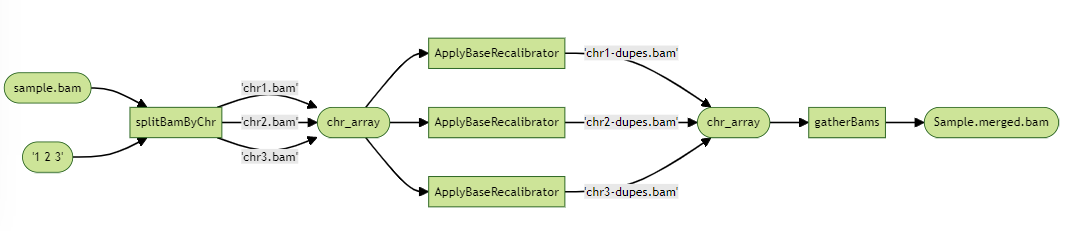

Suppose that one our tasks, ApplyBaseRecalibrator, is taking too long to run and taking on too much memory. We might first look into the tool’s documentation to see if there are ways use it more optimally, such as provide more CPUs, but we don’t see it. We turn to Embarrassingly Parallel Scatter-Gather, in which we split a BAM file by its chromosomes to create 23 BAM files, run ApplyBaseRecalibrator on each of these BAMs, and merge all of these calibrated BAM files together. This is called “Scatter-Gather” because we have to scatter our BAMs into smaller parts to run, and then gather them together in the end.

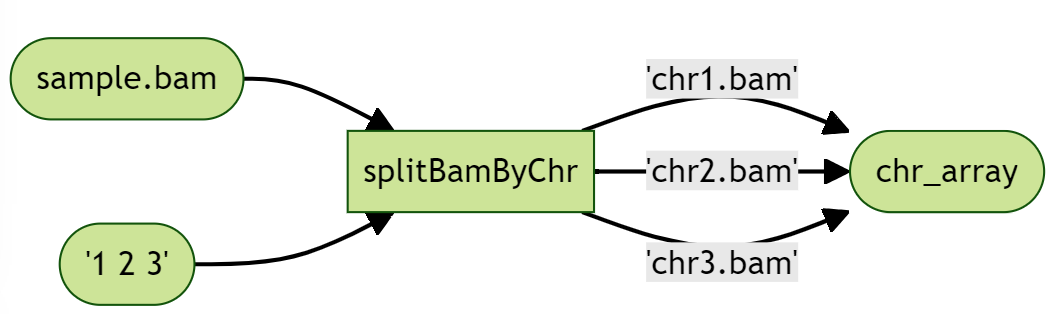

We first write a new task to split our BAM file, which takes the original BAM, an array of chromosomes to scatter, and outputs an array of Files of the smaller BAM files. The output array of Files is stored as bams and indexFiles, and illustrated in the diagram as “chr_array”.

# Split a BAM file by chromosomes

task splitBamByChr {

input {

File bamToSplit

File baiToSplit

Array[String] chromosomes

}

String baseFileName = basename(bamToSplit, ".bam")

command <<<

set -eo pipefail

#For each chromosome...

for x in ~{sep=' ' chromosomes}; do

outputFile="~{baseFileName}_${x}.bam"

samtools view -b -@ 3 "~{bamToSplit}" $x > $outputFile

samtools index $outputFile

done

# List all bam and bai files created

ls *.bam > bam_list.txt

ls *.bam.bai > bai_list.txt

>>>

output {

Array[File] bams = read_lines("bam_list.txt")

Array[File] indexFiles = read_lines("bai_list.txt")

}

runtime {

docker: "fredhutch/bwa:0.7.17"

cpu: 4

}

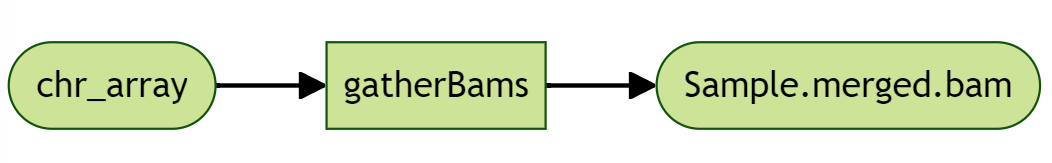

We also need a task to gather all the BAMs together, which takes in an array of Files, and outputs a merged BAM. The input array of Files is referred as bams, and illustrated in the diagram as chr_array.

#Gather an array of BAMs

task gatherBams {

input {

Array[File] bams

String clean_baseName_regex

}

String temp = basename(bams[0], ".bam")

String baseFileName = sub(temp, clean_baseName_regex, "")

command <<<

set -eo pipefail

samtools merge -c -@3 "~{baseFileName}".merged.bam ~{sep=' ' bams}

samtools index "~{baseFileName}".merged.bam

>>>

runtime {

cpu: 4

docker: "fredhutch/bwa:0.7.17"

}

output {

File merged_bam = "~{baseFileName}.merged.bam"

File merged_bai = "~{baseFileName}.merged.bam.bai"

}

}In our workflow, after MarkDuplicates task, we first use splitBamByChr to divvy up our BAM file, and then use a scatter over the output array of scattered BAMs to run ApplyBaseRecalibrator on each of the scatted BAMs. Finally, we take the output of ApplyBaseRecalibrator as an array to be merged together via gatherBams:

#Split by chromosomes

call splitBamByChr as tumorSplitBamByChr {

input:

bamToSplit = tumorMarkDuplicates.markDuplicates_bam,

baiToSplit = tumorMarkDuplicates.markDuplicates_bai,

chromosomes = chrs_to_split

}

#Scatter by chromosomes

scatter(i in range(length(tumorSplitBamByChr.indexFiles))) {

File tumorSubBam = tumorSplitBamByChr.bams[i]

File tumorSubBamIndex = tumorSplitBamByChr.indexFiles[i]

call ApplyBaseRecalibrator as tumorApplyBaseRecalibrator {

input:

input_bam = tumorSubBam,

input_bam_index = tumorSubBamIndex,

dbSNP_vcf = dbSNP_vcf,

dbSNP_vcf_index = dbSNP_vcf_index,

known_indels_sites_VCFs = known_indels_sites_VCFs,

known_indels_sites_indices = known_indels_sites_indices,

refGenome = refGenome

}

}

#Gather all chromosomes together

call gatherBams as tumorGatherBams {

input:

bams = tumorApplyBaseRecalibrator.recalibrated_bam,

clean_baseName_regex = ".duplicates_marked_12.recal"

}Our final workflow: